Overview

Azure Blob Storage is a highly scalable object storage solution provided by Microsoft Azure. It's commonly used to store large amounts of unstructured data, including JSON files. Integrating Azure Blob Storage with a SQL Database to read JSON data and store it in a structured format can be a powerful way to leverage the flexibility of JSON and the robustness of relational databases. This blog will guide you through the process of reading direct JSON file content from Azure Blob Storage and inserting it into a SQL Database.

These days, working with CSV or JSON data is becoming more and more widespread.Sometimes, especially when debugging or developing a new feature, I need to access that JSON data, before is sent to any further microservices for processing or, after that, being stored in the database.

Considering how often I work with SQL. Therefore, it would be incredibly helpful if it were possible to query JSON directly from T-SQL, eliminating the requirement to download the file from the Azure Blob Store. This will simplify and increase the efficiency of my work.

I would really like to have access to JSON wherever it is, exactly like you do with Hadoop or Azure Data Lake.

Well, you can. I just find out that with the latest additions (added since SQL Server 2017 and already available on Azure SQL v 12.0.2000.8) it is incredibly easy.

PreRequisites

Before we dive in, make sure you have the following:

- Azure Subscription: An active Azure subscription.

- Azure Blob Storage Account: A storage account with containers and JSON files.

Step-by-Step Guide

1. Set Up Your Azure Blob Storage

- Create a Blob Container: Navigate to your Azure Storage Account and create a blob container.

- Upload JSON Files: Upload your JSON files to the container. Ensure the JSON structure is consistent and ready for processing.

To see the JSON file content, click me 🙋

2. Configure SQL Database

- Create Database: If you haven't already, create an SQL Database.

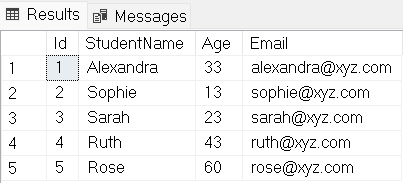

- Define Schema: Design the schema in your SQL Database to match the structure of your JSON data. For example:

First of all the Shared Access Signature needs to be created to allow SQL to access the Azure Blob Store where you have your JSON files. This can be done using the Azure Portal, from the Azure Storage Explorer.

Once you have the signature a Database Scoped Credential that points to the created Shared Access Signature needs to be created too:

You will be prompted, if you haven't already, to create a database master key before you can execute the previous operation.

After creating the credentials, build an External Data Source and point it to the Azure Blob Account containing your JSON files.

After that, you can use OPENROWSET and OPENJSON to begin experimenting with JSON files:

Output

Is it possible to get the code? Absolutely, click me to get the gist of it🙋

I hope it was enjoyable for you to read the content. But with these, we can only read the contents of one file at a time. In a later blog post, I'll demonstrate how to use Azure Synapse to read many files at once.